Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Last Stubsack for 2025 - may 2026 bring better tidings. Credit and/or blame to David Gerard for starting this.)

Neom update:

Description:

A Lego set on the clearance shelf. It’s an offroad truck that has Neom badges on it.

So Neom is one of those zany planned city ideas right?

Why… why do they need a racing team? Why does the racing team need a lego set? Who is buying it for 27 dollars? (Well apparently the answer to that last question is nobody).

Anyway a random thought I had about these sorts of silly city projects. Their website says:

NEOM is building the foundations for a new future - unconstrained by legacy city infrastructure, powered by renewable energy and prioritizing the conservation of nature. We are committed to developing the region to the highest standards of sustainability and livability.

(emphasis mine)

This is a weird worldview: the idea that you can sweep existing problems under the rug and start fresh with a blank slate.

No pollution (but don’t ask about how Saudi Arabia makes money), no existing costly “legacy” infrastructure to maintain (but don’t ask about how those other cities are getting along), no undesirables (but don’t worry they’re “complying with international standards for resettlement practices”*).

They assume there’s some external means of supplying money, day workers, solar panels, fuel, food, etc. As long as their Potemkin village is “sustainable” and “diverse” on the first order, they don’t have to think about that. Out of sight, out of mind. Pretty similar to the libertarian citadel fever dreams in a way.

* Actual quote from their website, eurrgh, which itself looks like a lie

“Why… why do they need a racing team? Why does the racing team need a lego set? Who is buying it for 27 dollars? (Well apparently the answer to that last question is nobody).”

Apparently NEOM is sponsoring some McLaren Formula E teams. (Formula E being electric). Google Pixel, Tumi luggage, and the UK Ministry of Defence are other sponsors, but NEOM seems to be the major sponsor.

I assume the market for these is not so much NEOM fans but rather McLaren fans.

As to why NEOM is sponsoring it, I think it’s a bit of Saudi boosterism or techwashing to help MBS move past the whole bone saw thing.

Dubai famously doesn’t have a sewage pipe system; human waste is loaded onto tanker trucks that spend hours waiting to offload it at the only sewage treatment plant available.

Dubai is in the United Arab Emirates, not Saudi Arabia.

The Soylent meal replacement thing apparently forgot some important micro nutrients.

NEOM is building the foundations for a new future - unconstrained by legacy city infrastructure, powered by renewable energy and prioritizing the conservation of nature. We are committed to developing the region to the highest standards of sustainability and livability.

lol, this is saudi, they found a way to make half of their water supply to riyadh nonrenewable

The best way of conserving nature is to build a ginormous wall 110 miles long and 1,600 feet high that utterly destroys wildlife’s ability to traverse territory it has been traversing for eons. It is known.

I hear ya!

I guess Neom is what happens when a billionaire in the desert gets infected by the seasteading brainworms.

The developer of an LLM image description service for the fediverse has (temporarily?) turned it off due to concerns from a blind person.

Link to the thread in question

Good for them

Good for them. Not quite abandoning the project and deleting it, but it’s a good move from them nonetheless.

https://github.com/leanprover/lean4/blob/master/.claude/CLAUDE.md

Imagine if you had to tell people “now remember to actually look at the code before changing it.” – but I’m sure LLMs will replace us any day now.

Also lol this sounds frustrating:

Update prompting when the user is frustrated: If the user expresses frustration with you, stop and ask them to help update this .claude/CLAUDE.md file with missing guidance.

Edit: I might be misreading this but is this signs of someone working on an LLM driven release process? https://github.com/leanprover/lean4/blob/master/.claude/commands/release.md ??

Important Notes: NEVER merge PRs autonomously - always wait for the user to merge PRs themselves

So many CRITICAL and MANDATORY steps in the release instruction file. As it always is with AI, if it doesn’t work, just use more forceful language and capital letters. One more CRITICAL bullet point bro, that’ll fix everything.

Sadly, I am not too surprised by the developers of Lean turning towards AI. The AI people have been quite interested in Lean for a while now since they think it is a useful tool to have AIs do math (and math = smart, you know).

Great. Now we’ll need to preserve low-background-radiation computer-verified proofs.

The whole culture of writing “system prompts” seems like utter cargo-culting to me. Like if the ST: Voyager episode “Tuvix” was instead about Lt. Barclay and Picard accidentally getting combined in the transporter, and the resulting sadboy Barcard spent the rest of his existence neurotically shouting his intricately detailed demands at the holodeck in an authoritative British tone.

If inference is all about taking derivatives in a vector space, surely there should be some marginally more deterministic method for constraining those vectors that could be readily proceduralized, instead of apparent subject-matter experts being reduced to wheedling with an imaginary friend. But I have been repeatedly assured by sane, sober experts that it simply is not so.

One of my old teachers would send documents to the class with various pieces of information. They were a few years away from retirement and never really got word processors. They would start by putting important stuff in bold. But some important things were more important than others. They got put in bold all caps. Sometimes, information was so critical it got put in bold, underline, all caps and red font colour. At the time we made fun of the teacher, but I don’t think I could blame them. They were doing the best they could with the knowledge of the tools they had at the time.

Now, in the files linked above I saw the word “never” in all caps, bold all caps, in italics and in a normal font. Apparently, one step in the process is mandatory. Are the others optional? This is supposed to be a procedure to be followed to the letter, with each step being there for a reason. These are supposed to be computer-savvy people.

CRITICAL RULE: You can ONLY run `release_steps.py` for a repository if `release_checklist.py` explicitly says to do so […] The checklist output will say “Run `script/release_steps.py {version} {repo_name}` to create it”

I’ll admit I did not read the scripts in detail, but this is a solved problem. The solution is a script with structured output as part of a pipeline. Why give up one of the only good things computers can do: executing a well-defined task in a deterministic way? Reading this is so exhausting…

It reminds me of the bizarre and ill-omened rituals my ancestors used to start a weed eater.

Yes, they are trying to automate releases.

sidenote: I don’t like how taking an approach of mediocre software engineering to mathematics is becoming more popular. Update your dependency (whose code you never read) to v0.4.5 for bug fixes! Why was it incorrect in the first place? Anyway, this blog post sets some good rules for reviewing computer proofs. The second-to-last comment tries to argue npm-ification is good actually. I can’t tell if it’s satire.

I don’t like how taking an approach of mediocre software engineering to mathematics is becoming more popular

would you be willing to elaborate on this? i am just curious because i took the opposite approach (started as a mathematician now i write bad python scripts)

The flipside to that quote is that computer programs are useful tools for mathematicians. See the Mersenne prime search, the OEIS and its search engine, the L-function database, as well as the various Python scripts and Agda, Rocq and Lean proofs written to solve specific problems within papers. However, not everything is perfect: throwing more compute at a problem is generally a bad solution, and the stereotypical Python script hacked together to serve a single purpose has one-letter variable names and redundant expressions, making it hard to review. Throw vibe coding over it all, and that’s pretty much the extent of what I mean.

I apologize if anything is confusing, I’m not great at communication. I also have yet to apply to a mathematics uni, so maybe this is all manageable in practice.

no need to apologize, i understand what you mean. my experience with mathematicians has been that this is really common. even the theoretical computer scientists (the “lemma, theorem, proof” kind) i have met do this kind of bullshit when they finally decide to write a line of code. hell, their pseudocode is often baffling — if you are literally unable to run the code through a machine, maybe focus on how it comes across to a human reader? nah, it’s more important that i believe it is technically correct and that no one else is able to verify it.

Happy new year everybody. They want to ban fireworks here next year, so people set fire to some parts of Dutch cities.

Unrelated to that, let 2026 be the year of the Butlerian Jihad.

Meanwhile in the US…

The mods were heavily downvoted and criticized for pulling the rug out from under the community, as well as for simultaneously modding pro-A.I.-relationship subs. One mod admitted:

“(I do mod on r/aipartners, which is not a pro-sub. Anyone who posts there should expect debate, pushback, or criticism on what you post, as that is allowed, but it doesn’t allow personal attacks or blanket comments, which applies to both pro and anti AI members. Calling people delusional wouldn’t be allowed in the same way saying that ‘all men are X’ or whatever wouldn’t. It’s focused more on a sociological issues, and we try to keep it from devolving into attacks.)”

A user, heavily upvoted, replied:

You’re a fucking mod on ai partners? Are you fucking kidding me?

It goes on and on like this. As of now, the post has amassed 343 comments. Most come from angry subscribers of the sub, while a few users from pro-A.I. subreddits keep praising the mods. Most users agree that brigading has to stop, but don’t understand why that means a sub called COGSUCKERS should suddenly be neutral toward, or accepting of, LLM relationships. Bear in mind that r/aipartners, which one of the mods also moderates, does not allow calling such relationships “delusional”. The most upvoted comments in this shitstorm:

“idk, some pro schmuck decided we were hating too hard 💀 i miss the days shitposting about the egg” https://www.reddit.com/r/cogsuckers/comments/1pxgyod/comment/nwb159k/

That was quite the rabbit-hole.

The whole time I’m sitting here thinking, “do these mods realize they’re moderating a subreddit called ‘cogsuckers’?”

There are some comments speculating that some pro-AI people try to infiltrate anti-AI subreddits by applying for moderator positions and then shutting those subreddits down. I think this is the most reasonable explanation for why the mods of “cogsuckers” of all places are sealions for pro-AI arguments. (In the more recent posts in that subreddit, I recognized many usernames who were prominent mods in pro-AI subreddits.)

I don’t understand what they gain from shutting down subreddits of all things. Do they really think that using these scummy tactics will somehow result in more positive opinions towards AI? Or are they trying the fascist gambit hoping that they will have so much power that public opinion won’t matter anymore? They aren’t exactly billionaires buying out media networks.

Do they really think that using these scummy tactics will somehow result in more positive opinions towards AI?

Well, where would someone complain about their scummy tactics? All the places where they could have were shut down.

New believers spreading the “good news” eh?

When you go so hard you Hadamard

A rival gang of “AI” “researchers” dare to make fun of Big Yud’s latest book and the LW crowd are Not Happy

Link to takedown: https://www.mechanize.work/blog/unfalsifiable-stories-of-doom/ (heartbreaking: the worst people you know made some good points)

When we say Y&S’s arguments are theological, we don’t just mean they sound religious. Nor are we using “theological” to simply mean “wrong”. For example, we would not call belief in a flat Earth theological. That’s because, although this belief is clearly false, it still stems from empirical observations (however misinterpreted).

What we mean is that Y&S’s methods resemble theology in both structure and approach. Their work is fundamentally untestable. They develop extensive theories about nonexistent, idealized, ultrapowerful beings. They support these theories with long chains of abstract reasoning rather than empirical observation. They rarely define their concepts precisely, opting to explain them through allegorical stories and metaphors whose meaning is ambiguous.

Their arguments, moreover, are employed in service of an eschatological conclusion. They present a stark binary choice: either we achieve alignment or face total extinction. In their view, there’s no room for partial solutions, or muddling through. The ordinary methods of dealing with technological safety, like continuous iteration and testing, are utterly unable to solve this challenge. There is a sharp line separating the “before” and “after”: once superintelligent AI is created, our doom will be decided.

LW announcement, check out the karma scores! https://www.lesswrong.com/posts/Bu3dhPxw6E8enRGMC/stephen-mcaleese-s-shortform?commentId=BkNBuHoLw5JXjftCP

Update: LessWrong attempts to debunk the piece with inline comments here

https://www.lesswrong.com/posts/i6sBAT4SPCJnBPKPJ/mechanize-work-s-essay-on-unfalsifiable-doom

Leading to such hilarious howlers as

Then solving alignment could be no easier than preventing the Germans from endorsing the Nazi ideology and commiting genocide.

Ummm, pretty sure engaging in a new world war and getting their country bombed to pieces was not on most Germans’ agenda. A small group of ideologues managed to seize complete control of the state, and did their very best to prevent widespread knowledge of the Holocaust from getting out. At the same time, they used the power of the state to ruthlessly suppress any opposition.

rejecting Yudkowsky-Soares’ arguments would require that ultrapowerful beings are either theoretically impossible (which is highly unlikely)

ohai begging the question

I clicked through too much and ended up finding this. Congrats to jdp for getting onto my radar, I suppose. Are LLMs bad for humans? Maybe. Are LLMs secretly creating a (mind-)virus without telling humans? That’s a helluva question, you should share your drugs with me while we talk about it.

A few comments…

We want to engage with these critics, but there is no standard argument to respond to, no single text that unifies the AI safety community.

Yeah, Eliezer had a solid decade and a half to develop a presence in academic literature. Nick Bostrom at least sort of tried to formalize some of the arguments but didn’t really succeed. I don’t think they could have succeeded, given how speculative their stuff is, but if they had, review papers could have tried to consolidate them and then people could actually respond to the arguments fully. (We all know how Eliezer loves to complain about people not responding to his full set of arguments.)

Apart from a few brief mentions of real-world examples of LLMs acting unstable, like the case of Sydney Bing, the online appendix contains what seems to be the closest thing Y&S present to an empirical argument for their central thesis.

But in fact, none of these lines of evidence support their theory. All of these behaviors are distinctly human, not alien.

Even granting that Anthropic’s “research” tends to be rigged scenarios acting as marketing hype, without peer review or academic levels of quality, at the very least they (usually) involve actual AI systems that actually exist. It is pretty absurd the extent to which Eliezer has ignored everything about how LLMs actually work (or how they might hypothetically work after major foundational developments) in favor of repeating the same scenario he came up with in the mid-2000s. Nor has he even tried mathematical analyses of which classes of problems are computationally tractable to a smart enough entity and which remain intractable. (titotal has written some blog posts about this with materials science; tl;dr, even if magic nanotech were possible, an AGI would need lots of experimentation and couldn’t just figure it out with simulations. Or see the LessWrong post explaining how chaos theory and slight imperfections in measurement make a game of pinball unpredictable past a few ricochets.)
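The pinball point comes down to arithmetic: if each collision multiplies a small position error by some factor, better measurements only buy you a logarithmic number of extra bounces. Here’s a toy sketch; the 10× growth per bounce and the 5 mm “which side of the bumper” scale are made-up numbers, not figures from the LessWrong post.

```python
def bounces_until_unpredictable(initial_error: float, amplification: float, scale: float) -> int:
    """Count collisions until a position error, multiplied by `amplification`
    at every collision, grows past `scale` (roughly the size of a bumper).
    All inputs are hypothetical illustration values."""
    if amplification <= 1.0:
        raise ValueError("errors must grow for this toy model to terminate")
    error, bounces = initial_error, 0
    while error <= scale:
        error *= amplification
        bounces += 1
    return bounces


print(bounces_until_unpredictable(1e-6, 10.0, 0.005))  # 4  (micron-level measurement)
print(bounces_until_unpredictable(1e-9, 10.0, 0.005))  # 7  (1000x better measurement)
```

Measuring a thousand times more precisely buys three more bounces. No amount of thinking really hard gets around that.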

The lesswrong responses are stubborn as always.

That’s because we aren’t in the superintelligent regime yet.

Y’all aren’t beating the theology allegations.

Yeah, Eliezer had a solid decade and a half to develop a presence in academic literature. Nick Bostrom at least sort of tried to formalize some of the arguments but didn’t really succeed.

(Guy in hot dog suit) “We’re all looking for the person who didn’t do this!”

Internet Comment Etiquette with Erik just got off YT probation/timeout, imposed when YouTube’s moderation AI flagged a decade-old video for having Russian parkour.

He celebrated by posting the below under a pipebomb video.

Hey, this is my son. Stop making fun of his school project. At least he worked hard on it. unlike all you little fucks using AI to write essays about books you don’t know how to read. So you can go use AI to get ahead in the workforce until your AI manager fires you for sexually harassing the AI secretary. And then your AI health insurance gets cut off so you die sick and alone in the arms of your AI fuck butler who then immediately cremates you and compresses your ashes into bricks to build more AI data centers. The only way anyone will ever know you existed will be the dozens of AI Studio Ghibli photos you’ve made of yourself in a vain attempt to be included. But all you’ve accomplished is making the price of my RAM go up for a year. You know, just because something is inevitable doesn’t mean it can’t be molded by insults and mockery. And if you depend on AI and its current state for things like moderation, well then fuck you. Also, hey, nice pipe bomb, bro.

good morning awful, I found you the first thing you’ll want to scream at today

palantir and others offering free addictions, all in the name of “productivity”

Zyn Buddhists

Orthodoxycodone

Seventh Day Add-fent-ists

Freemason Freebasin’

Latter Say Daints

Fat Blunt Gong

Another video on Honey (“The Honey Files Expose Major Fraud!”) - https://www.youtube.com/watch?v=qCGT_CKGgFE

Shame he missed Cyber Monday by a couple weeks.

Also 16:35 haha ofc it’s just json full of regexes.

They avoid the classic mistake of forgetting to escape `.` in the URL regex. I’ve made that mistake before…

Like, imagine you have a mission-critical URL regex telling your code which websites to trust, such as `https://www.trusted-website.net/.*`, but then someone comes along and registers the domain `wwwwtrusted-website.net`. I’m convinced that’s some sort of niche security vulnerability in some existing system, but no one has run into it yet.

None of this comment is actually important. The URL regexes just gave me work flashbacks.
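A quick demonstration with Python’s `re`, using the pattern and lookalike domain from the comment above (the `/login` paths are just filler). The last function is one sketch of the less fragile alternative: parse the URL and compare the hostname exactly instead of regexing the whole string.

```python
import re
from urllib.parse import urlsplit

# Pattern from the comment with the dots left unescaped: each "." matches ANY character.
naive = re.compile(r"https://www.trusted-website.net/.*")
# Same pattern with the literal dots escaped.
escaped = re.compile(r"https://www\.trusted-website\.net/.*")

legit = "https://www.trusted-website.net/login"
spoof = "https://wwwwtrusted-website.net/login"  # the lookalike domain

print(bool(naive.match(legit)), bool(naive.match(spoof)))      # True True
print(bool(escaped.match(legit)), bool(escaped.match(spoof)))  # True False


def is_trusted(url: str) -> bool:
    """Exact scheme and hostname comparison on the parsed URL, no regex involved."""
    parts = urlsplit(url)
    return parts.scheme == "https" and parts.hostname == "www.trusted-website.net"
```

The unescaped `.` after `www` happily eats the fourth `w`, which is the whole bug.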

a couple weeks back I had a many-rounds support ticket with a network vendor, querying exactly the details of their regex implementation. docs all said PCRE, actual usage attempts indicated….something very much else. and indeed it was because of `.` that I found it

CW: Slop, body humor, Minions

So my boys received Minion Fart Rifles for Christmas from people who should have known better. The toys are made up of a compact fog machine combined with a vortex gun and a speaker. The fog machine component is fueled by a mixture of glycerin and distilled water that comes in two scented varieties: banana and farts. The guns make tidy little smoke rings that can stably deliver a payload tens of feet in still air.

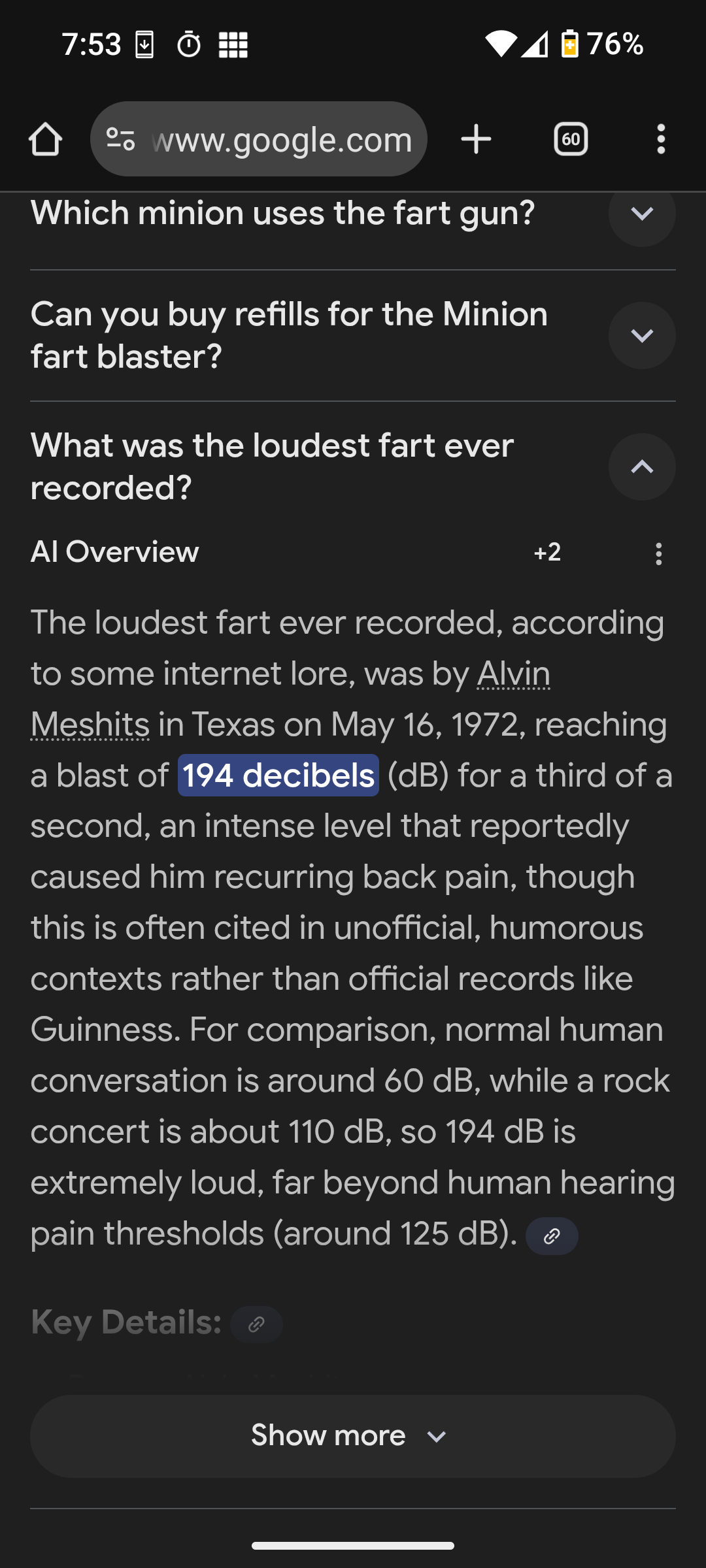

Anyway, as soon as they were fired up, Ammo Anxiety reared its ugly head, so I went in search of a refill recipe (I searched “Minions Vortex Gun Refill Recipe”), and goog returned this fartifact*:

194 dB, you say? Alvin Meshits? The rabbit hole beckoned.

The “source links” were mostly unrelated except one, which was a reddit thread that lazily cited ChatGPT generating the same text almost verbatim in response to the question, “What was the loudest ever fart?”

Luckily, a bit of detectoring turned up the true source, an ancient Uncyclopedia article’s “Fun Facts” section:

https://en.uncyclopedia.co/wiki/Fartium

The loudest fart ever recorded occurred on May 16, 1972 in Madeline, Texas by Alvin Meshits. The blast maintained a level of 194 decibels for one third of a second. Mr. Meshits now has recurring back pain as a result of this feat.

Welcome to the future!

* yeah I took the bait / I don’t know what I expected

That toy sounds like someone took a vape and turned it into a smoke ring launcher. Have you tried filling it with vape juice?

right? lol but I can’t, it’s too popular with the kiddos

Wouldn’t that be the refill recipe you were looking for? Vape juice is just a mix of propylene glycol and vegetable glycerine. I think it’s the glycerine that is responsible for the “smoke”.

THC vape juice?

Maybe if we’re lucky, Alvin Meshits can team up with https://en.wikipedia.org/wiki/Bum_Farto for the feel-good buddy comedy of the summer. Remember, the more you toot, the better you feel!

I want a vortex ring gun.

Somewhat interestingly, 194 decibels is the loudest that a sound can be physically sustained in the Earth’s atmosphere. At that point the “bottom” of the pressure wave is a vacuum. Some enormous blast such as a huge meteor impact, a supervolcano eruption or a very large nuclear weapon can exceed that limit but only for the initial pulse.
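Where the 194 dB number comes from: sound pressure level in air is 20·log10(p / 20 µPa), and a wave whose troughs just touch vacuum has a pressure amplitude of one atmosphere. A small sketch using standard sea-level pressure:

```python
import math

P_REF = 20e-6        # reference pressure for SPL in air, Pa (20 µPa)
P_ATM = 101_325.0    # standard sea-level atmospheric pressure, Pa


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB: SPL = 20 * log10(p / p_ref)."""
    return 20.0 * math.log10(pressure_pa / P_REF)


# Trough of the wave can dip at most one atmosphere below ambient before hitting vacuum.
print(round(spl_db(P_ATM), 1))                 # 194.1 (peak amplitude = 1 atm)
print(round(spl_db(P_ATM / math.sqrt(2)), 1))  # 191.1 (RMS of that sine wave)
```

The usual 194 dB figure plugs in the peak amplitude; if you use the RMS pressure of a sine wave, which is how SPL is normally measured, the ceiling comes out about 3 dB lower.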

I suspect a 194 dB fart would blow the person in half.

Vacuum-driven total intestinal eversion, nobody’s ever seen anything like it

Apparently there’s another brand that describes its scents as “(rich durian & mellow cheese)”

upvoted for “fartifact”

This is a fun read: https://nesbitt.io/2025/12/27/how-to-ruin-all-of-package-management.html

Starts out strong:

Prediction markets are supposed to be hard to manipulate because manipulation is expensive and the market corrects. This assumes you can’t cheaply manufacture the underlying reality. In package management, you can. The entire npm registry runs on trust and free API calls.

And ends well, too.

The difference is that humans might notice something feels off. A developer might pause at a package with 10,000 stars but three commits and no issues. An AI agent running npm install won’t hesitate. It’s pattern-matching, not evaluating.
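A crude version of the “something feels off” check a human might do, as a sketch. The fields, the 100-stars-per-commit threshold, and the package name are all hypothetical, and per the article’s own point, every one of these numbers can also be cheaply faked.

```python
from dataclasses import dataclass


@dataclass
class PackageStats:
    # Hypothetical fields; real numbers would have to come from a registry or forge API.
    name: str
    stars: int
    commits: int
    open_issues: int
    closed_issues: int


def looks_inflated(p: PackageStats, max_stars_per_commit: float = 100.0) -> bool:
    """Flag lots of stars with almost no development and zero issue activity.
    The threshold is an arbitrary illustration value."""
    stars_per_commit = p.stars / max(p.commits, 1)  # avoid dividing by zero commits
    no_issues = (p.open_issues + p.closed_issues) == 0
    return stars_per_commit > max_stars_per_commit and no_issues


print(looks_inflated(PackageStats("hypothetical-pkg", 10_000, 3, 0, 0)))  # True
```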

the tea.xyz experiment section is exactly describing academic publishing

Foz Meadows brings a lengthy and merciless sneer straight from the heart, aptly-titled “Against AI”

Cory’s talk on 39C3 was fucking glorious: https://media.ccc.de/v/39c3-a-post-american-enshittification-resistant-internet

No notes

lowkey disappointed to see so much slop in other talks (illustrations on slides mostly)

Really? Which ones? I didn’t notice any

so far (in the order i’m watching) the worst offender https://media.ccc.de/v/39c3-a-quick-stop-at-the-hostileshop

Yeaaaaah I saw that on the schedule and decided not to go, lol.

fella didn’t even introduce himself other than with a link to a github profile, which might not strictly speaking be his

at least this one https://media.ccc.de/v/39c3-chaos-communication-chemistry-dna-security-systems-based-on-molecular-randomness#t=1112 and the next slide

a chunk of software was vibecoded unless i misunderstand something about it https://media.ccc.de/v/39c3-hacking-washing-machines#t=2629

the talk about water content in soil was bad but the topic itself was interesting

things that fella got wrong: a TDR moisture meter works by measuring what is effectively the electrical length of a waveguide formed by 2 electrodes and the soil around them. more water = higher dielectric constant = longer delay for the reflection. this only measures as deep as the probe goes, and the rest is fitted from a model
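The chain “more water = higher dielectric constant = longer delay” can be sketched in two steps: the pulse travels down the rods and back (2L) at c/√Ka, which gives the apparent permittivity Ka, and an empirical calibration turns Ka into water content. The calibration used here is the widely cited Topp et al. (1980) polynomial, swapped in as an assumption; a given meter may use its own model, and the probe length and delay are made-up numbers.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def apparent_permittivity(two_way_time_s: float, probe_length_m: float) -> float:
    """Ka from the two-way travel time along the rods: the pulse covers 2L at
    speed c / sqrt(Ka), so Ka = (c * t / 2L)^2 (non-magnetic, low-loss soil assumed)."""
    return (C * two_way_time_s / (2.0 * probe_length_m)) ** 2


def topp_water_content(ka: float) -> float:
    """Empirical Topp et al. (1980) calibration: volumetric water content (m^3/m^3)."""
    return -5.3e-2 + 2.92e-2 * ka - 5.5e-4 * ka**2 + 4.3e-6 * ka**3


# Hypothetical 0.2 m probe with a delay chosen so that Ka comes out at 16.
delay = 2 * 0.2 * 4 / C  # about 5.3 ns
ka = apparent_permittivity(delay, 0.2)
print(round(topp_water_content(ka), 3))  # 0.291
```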

a neutron detector works just like a geiger tube, except the gas has a large cross-section for reaction with neutrons, which gives charged products that start a spark, which is counted. in a train, steel doesn’t interfere but diesel fuel will. the trick is that cosmic neutrons are counted separately from reflected neutrons, because cosmic neutrons are hot and reflected neutrons are thermal, and how many reflected ones there are depends on the water content of the soil. helium does not run out. the more helium you have, the faster the counts go, and the faster you can move while measuring with decent precision. the lead shield in the train is for getting rid of radiation from the granite aggregate under the rails, because it contains tiny amounts of uranium and gammas from the decay chain would add to the noise. lead does not interfere
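Why more helium means you can move faster: neutron counts follow Poisson statistics, so N counts carry a relative error of 1/√N. Hitting a fixed precision takes a fixed number of counts, so the counting time per reading scales as 1/rate. A sketch, assuming for illustration that the count rate scales with the amount of detector gas; the rates and the 5% target are made-up numbers.

```python
import math


def relative_error(counts: int) -> float:
    """Poisson counting: N counts have standard deviation sqrt(N),
    so the 1-sigma relative error is 1 / sqrt(N)."""
    return 1.0 / math.sqrt(counts)


def seconds_needed(count_rate_per_s: float, target_rel_error: float) -> float:
    """Counting time to reach a target relative error at a given rate:
    1 / sqrt(rate * t) = err  =>  t = 1 / (rate * err**2)."""
    return 1.0 / (count_rate_per_s * target_rel_error ** 2)


# Hypothetical rates: doubling the count rate halves the time per 5% reading.
for rate in (2.0, 4.0):
    print(rate, round(seconds_needed(rate, 0.05), 1))  # 200.0 s, then 100.0 s
```

For a fixed along-track resolution, halving the time per reading lets the train go twice as fast.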