From reading all the comments from the community, it’s amazing (yet not surprising) that all these managers have fallen for the marketing of all these LLMs. These LLMs have gotten people from all levels of society to just accept the marketing without ever considering the actual results for their use cases. It’s almost like the sycophantic nature of all LLMs has completely blinded people to reason, just because it is shiny and it spoke to them in a way no one has in years.

On the surface level, LLMs are cool, no doubt, and they do have some uses. But past that everyone needs to accept their limitations. LLMs by nature cannot operate the same as a human brain. AGI is such a long shot because of this, and it’s a scam that LLMs are being marketed as AGI. How can we attempt to recreate the human brain as AGI when we are not close to mapping out how our brains work in a way that translates into code, let alone the simpler brains in the animal kingdom?

I don’t think LLMs will become AGI, but… planes don’t fly by flapping their wings. We don’t necessarily need to know how animal brains work to achieve AGI, and it doesn’t necessarily have to work anything like animal brains. It’s quite possible if/when AGI is achieved, it will be completely alien.

I can’t wait until billionaires realize how worthless they actually are without people doing everything for them

Eh, as the world goes to shit there will always be desperate people willing to work for them, probably cheaper than before even with the AI failures, so they wouldn’t care.

They will never realize that, they will blame any failures on others naturally. They truly believe they are better than everyone else, that their superior ability led them to invest in a company that increased in value enough for them to become filthy rich.

Surrounded by yes men and women who agree with everything they say and tell them what a genius they are. Of course any ill outcome isn’t their fault.

Wouldn’t hold my breath for it.

I had a meeting with my boss today about my AI usage. I said I tried using Claude 4.5, and I was ultimately unimpressed with the results, the code was heavy and inflexible. He assured me Claude 4.6 would solve that problem. I pointed out that I am already writing software faster than the rest of the team can review because we are short staffed. He suggested I use Claude to review my MRs.

One big problem with management is their inability to listen. Folks say shit over and over but management seems deaf because we’re not people to be listened to. We’re the help. And management acts like they know better.

If you were so smart you’d have wads of cash like them. They got where they are through sheer grit and bootstraps and a paltry $50 million from their family.

next time, tell your boss that Claude should replace him, not you.

Ralph Wiggum loop that shit

Numbers go up, Claude won’t bother you 👍🏻

The trick is to tell them you’ve been using it more than they have and that it’s not as good as ChatGPT for task A, but that for task B Claude does okay 25% of the time, so we’ll need to 4x the timeline in order to get a good Claude output based on that expected value.
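For what it’s worth, the 4x figure does fall out of the arithmetic if you assume each attempt is independent and succeeds 25% of the time. That independence is an assumption made here for illustration, and `expected_attempts` is a made-up helper name. A minimal Python sketch:

```python
def expected_attempts(p_success: float) -> float:
    """Mean number of independent tries until the first success (geometric distribution)."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    return 1.0 / p_success


# 25% chance of an acceptable output per attempt, as in the comment above
timeline_multiplier = expected_attempts(0.25)
print(timeline_multiplier)  # 4.0
```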

But not as good as your personal local LLM that you’ve been training on company data. No one else can use it because it’s illegal to clone. (your personal local LLM is your brain)

Telling your boss you trained a personal LLM with company data will lead to nothing but you holding a box full of your stuff about 10 minutes later.

Yeah, and that’s possible even if they take it for the joke that it is (or isn’t)

“Top-down mandates to use large language models are crazy,” one employee told Wired. “If the tool were good, we’d all just use it.”

Yep.

Management is often out of touch and full of shit

Management: “No, that doesn’t work, because employees spend so much time doing the actual work that they lack the vision to know what’s good for them. Luckily for them I am not distracted by actual work so I have the vision to save them by making them use AI.”

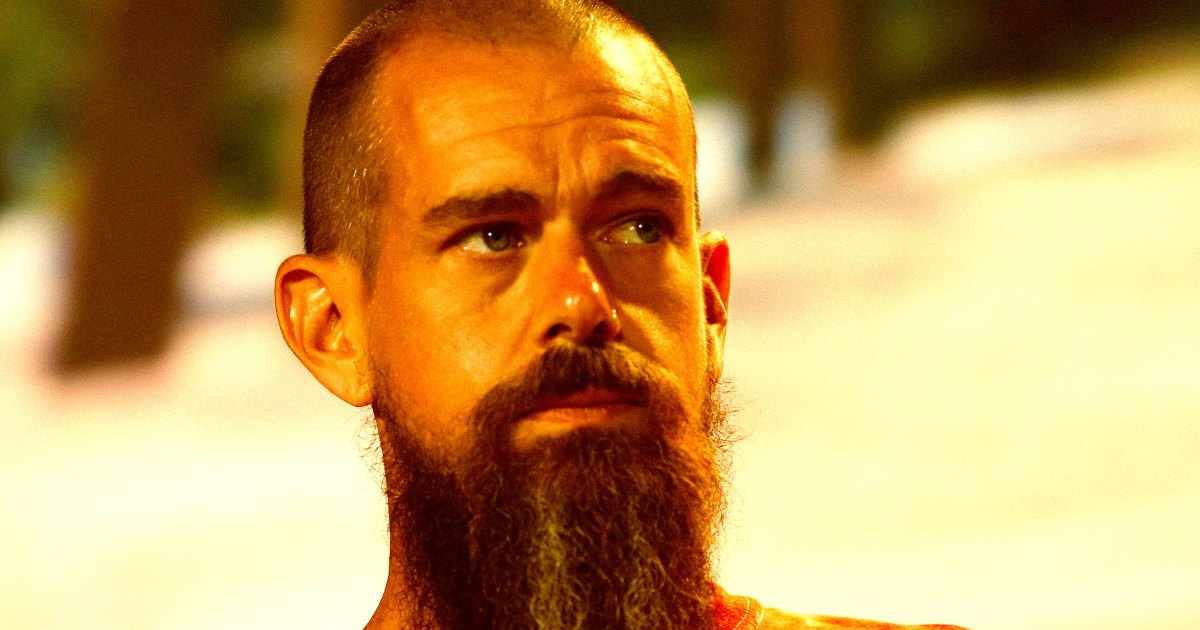

Something an idiot would say. Jack Dorsey is precisely this type of idiot. He’s not the only idiot, though

You wanna know who really bags on LLMs? Actual AI developers. I work with some, and you’ve never heard someone shit all over this garbage like someone who works with neural networks for a living.

our company renamed our ML team to “AI team” just to please investors; they’ve been around for over a decade and never touched an LLM

There’s this great rage blog post from 1.5 years ago by a data scientist

Oh, the guy from Hermit Tech! He’s great, and his blog is hilarious and poignant in turns (though sometimes both at the same time).

Missed this one, thanks

That’s me, but for QA…

At work today we had a little presentation about Claude Cowork. And I learned someone used it to write a C (maybe C++?) compiler in Rust in two weeks at a cost of $20k and it passed 99% of whatever hell test suite they use for evaluating compilers. And I had a few thoughts.

- 99% pass rate? Maybe that’s super impressive because it’s a stress test, but if 1% of my code fails to compile I think I’d be in deep shit.

- $20k in two weeks is a heavy burn. Imagine if what it wrote was… garbage.

- “Write a compiler” is a complete project plan in three words. Find a business project that is that simple and I’ll show you software that is cheaper to buy than build. We are currently working on an authentication broker service at work and we’ve been doing architecture and trying to get everyone to agree on a design for 2 months. There are thousands of words devoted to just the high level stuff, plus complex flow diagrams.

- A compiler might be somewhat unique in the sense that there are literally thousands of test cases available - download a foss project and try to compile it. If it fails, figure out the bug and fix it. Repeat. The ERP that your boss wants you to stand up in a month has zero test coverage and is going to be chock full of bugs — if for no other reason than you haven’t thought through every single edge case and neither has the AI because lots of times those are business questions.

- There is not a single person who knows the code base well enough to troubleshoot any weird bugs and transient errors.

I think this is a cool thing in the abstract. But in reality, they cherry picked the best possible use case in the world and anyone expecting their custom project is going to go like this will be lighting huge piles of money on fire.

> 99% pass rate? Maybe that’s super impressive because it’s a stress test, but if 1% of my code fails to compile I think I’d be in deep shit.

Also - one of the main arguments of vibe coding advocates is that you just need to check the result several times and tell the AI assistant what needs fixing. Isn’t a compiler test suite ideal for such a workflow? Why couldn’t they just feed the test failures back to the model and tell it to fix them, iterating again and again until they get it to work 100%?

Maybe they did, that’s how they got to 99%. The remaining issues are so intricate/complex the LLM just can’t solve them no matter how many test cases you give it.

https://harshanu.space/en/tech/ccc-vs-gcc/ has a good overview of how bad it really is

Thank you. Great addition. That was a very interesting read, though I need to be more awake for reading technical writing like that 🥱.

My point about spending $20k to produce garbage, then, was actually realized in this “perfect” use case.

That was interesting to read if not a bit jargon heavy. Thanks for sharing

It’s even simpler than that: using an LLM to write a C compiler is the same as downloading an existing open source implementation of a C compiler from the Internet, but with extra steps, as the LLM was actually fed with that code and is just re-assembling it back together but with extra bugs - plagiarism hidden behind an automated text parrot interface.

A human can beat the LLM at that by simply finding and downloading an implementation of that more-than-solved problem from the Internet, which at worst will take maybe 1h.

The LLM can “solve” simple and well defined problems because it’s basically plagiarizing existing code that solves those problems.

Hey, so I started this comment to disagree with you and correct some common misunderstandings that I’ve been fighting against for years. Instead, as I was formulating my response, I realized you’re substantially right and I’ve been wrong — or at least my thinking was incomplete. I figured I’d mention because the common perception is arguing with strangers on the internet never accomplishes anything.

LLMs are not fundamentally the plagiarism machines everyone claims they are. If a model reproduces any substantial text verbatim, it’s because the LLM is overtrained on too small of a data set and the solution is, somewhat paradoxically, to feed it more relevant text. That has been the crux of my argument for years.

That being said, Anthropic and OpenAI aren’t just LLM models. They are backed by RAG pipelines which are verbatim text that gets inserted into the context when it is relevant to the task at hand. And that fact had been escaping my consideration until now. Thank you.

Even the LLM part might be considered Plagiarism.

Basically, unlike humans it cannot assemble an output based on logical principles (i.e. assemble a logical model of the flows in a piece of code and then translate it to code), it can only produce text based on an N-space of probabilities derived from the works of others it has “read” (i.e. that were fed to it during training).

That text assembling could be the machine equivalent of Inspiration (such as how most programmers will include elements they’ve seen from others in their code) but it could also be Plagiarism.

Ultimately it boils down to where the boundary between Inspiration and Plagiarism stands.

As I see it, if for specific tasks there is overwhelming dominance of trained weights from a handful of works (which, one would expect, would probably be the case for a C-compiler coded in Rust), then that’s a lot more towards the Plagiarism side than the Inspiration side.

Granted, it’s not the verbatim copying of an entire codebase that would legally be deemed Plagiarism, but if it’s almost entirely a montage made up of pieces from a handful of codebases, could it not be considered a variant of Plagiarism that is incredibly hard for humans to pull off but not so for an automated system?

Note that obviously the LLM has no “intention to copy”, since it has no will or cognition at all. What I’m saying is that the people who made it intentionally built an automated system that copies elements of existing works. Normally it assembles its results from very small textual elements (same as a person who has learned how letters and words work can create a unique work from letters and words). But they built it with the awareness that in some situations the number of sources behind an output is so low that, even though it’s assembling the output token by token, it’s pretty much just copying whole blocks from those sources, same as a human manually copying text from one document to another would.

In summary, IMHO LLMs don’t always plagiarize, but can sometimes do it when the number of sources that ended up creating the volume of the N-dimensional probabilistic space the LLM is following for that output is very low.

I agree with you on a technical level. I still think LLMs are transformative of the original text, and if

> when the number of sources that’s what ultimately created the volume of the N-dimensional probabilistic space they’re following is very low.

then the solution is to feed it even more relevant data. But I appreciate your perspective. I still disagree, but I respect your point of view.

I’ll give what you’ve written some more thought and maybe respond in greater depth later but I’m getting pulled away. Just wanted to say thanks for the detailed and thorough response.

I would be interested in knowing what language it was for sure, as there is a huge difference between a C and a C++ compiler in terms of complexity

I just posted where I found the source in another comment. It would have probably the information you’re interested in.

I think this is the reported project: https://github.com/anthropics/claudes-c-compiler

And here’s a pretty good article about it https://arstechnica.com/ai/2026/02/sixteen-claude-ai-agents-working-together-created-a-new-c-compiler/

Also, software development is already the best possible use case for LLMs: you need to build something abiding by a set of rules (as in a literal language, lmao), and you can immediately test if it works.

In e.g. a legal use case instead, you can jerk off to the confident sounding text you generated, then you get chewed out by the judge for having hallucinated references. Even if you have a set of rules (laws) as guardrails, you cannot immediately test what the AI generated - and if an expert needs to read and check everything in detail, then why not just do it themselves in the same amount of time?

We can go on to business, where the rules the AI can work inside are much looser, or healthcare, where the cost of failure is extremely high. And we are not even talking about responsibilities, official accountability for decisions.

I just don’t think what is claimed for AI is there. Maybe it will be, but I don’t see it as an organic continuation of the path we’re on. We might have another dot com bust when investors realize this - LLMs will be here to stay (same as the internet), but they will not become AGI.

I also often get assigned projects where all the tests are written out beforehand and I can look at an existing implementation while I work…

A C compiler in two weeks is a difficult, but doable, grad school class project (especially if you use `lex` and `yacc` instead of hand-coding the parser). And I guarantee 80 hours of grad student time costs less than $20k.

Frankly, I’m not impressed with the presentation in your anecdote at all.
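To put a number on that comparison: taking the 80 hours and the $20k from the comments above, a rough break-even hourly rate can be worked out as below. This is only a back-of-the-envelope sketch; the variable names are made up for illustration, and no actual grad student pay rate is assumed.

```python
# Figures taken from the thread: two weeks of work (~80 hours) vs. a $20k bill.
llm_cost_usd = 20_000
grad_student_hours = 80

# Hourly rate at which 80 hours of human time would cost the same as the LLM run.
break_even_rate = llm_cost_usd / grad_student_hours
print(f"${break_even_rate:.0f}/hour")  # $250/hour
```

So the grad student only loses the cost comparison if their time is billed above that rate.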

Here is the original cite that my company pulled that from if you want more details.

I’ve never written a compiler, nor anything in Rust, so I have no idea of the effort involved. I’m just boggling over the price tag. I’ll bet that’s the cost of an entire offshore team.

Yeah, the thing also has limited scope and requires some meddling to point to necessary includes as evidenced by the first issue, afair. And the code produced is subpar I heard

Agree with all points. Additionally, compilers are also incredibly well specified via ISO standards etc., and have multiple open source codebases available, e.g. GCC, which is available in multiple builds and implementations for different versions of C and C++, and DQNEO/cc.go.

So there are many fully-functional and complete sources that Claude Cowork would have pulled routines and code from.

The vibe coded compiler is likely unmaintainable, so it can’t be updated when the spec changes even assuming it did work and was real. So you’d have to redo the entire thing. It’s silly.

Updates? You just vibecode a new compiler that follows the new spec

“I want to add a command line option that auto generates helloworld.exe”

“That’ll be $21,000.”

Don’t forget that there are tons of C compilers in the dataset already

Man, corporate layoffs kill productivity completely for me.

Once you do layoffs >50% of the job becomes performative bullshit to show you’re worth keeping, instead of building things the company actually needs to function and compete.

And the layoffs are random with a side helping of execs saving the people they have face time with.

Who?

The original creator of Twitter and now creator of Bluesky and whatever this thing that’s falling off the rails is.

Basically another billionaire living in his own little bubble and huffing his own farts too much.

he left Bluesky around 2 years ago

That must be why they are doing okay, haha.

He also had a lot to do with Nostr, early on.

Jack Dorsey has endorsed and financially supported the development of Nostr by donating approximately $250,000 worth of Bitcoin to the developers of the project in 2023, as well as a $10 million cash donation to a Nostr development collective in 2025.

What?

Oops, I misread my source. Have updated my comment.

Is that thumbnail a scene from 12 monkeys?

Naw. This is clearly just 1 monkey.

pffft, you give him too much credit.

Right before he dies, yeah

Uhhh, Block is the parent company of Square (formerly known as Square Up). This is actually a huge company, not some little side thing.

Gonna need a longer beard.